Arbitrary tweets made by TheGingerDog (i.e. David Goodwin) up to 30 August 2011

Continue reading “Automated twitter compilation up to 30 August 2011”

Linux, PHP, geeky stuff … boring man.

Arbitrary tweets made by TheGingerDog (i.e. David Goodwin) up to 27 July 2011

Continue reading “Automated twitter compilation up to 27 July 2011”

The SlimFramework is a ‘minimal’ PHP5 framework. We’re using it in one project, integrating with Smarty, Propel and the Zend Framework (as I don’t like Zend_View, it didn’t seem worth using Zend_Controller_Action, although what we do have is very similar to one).

Anyway, when creating your front controller in Slim, you can define ‘middleware’ (i.e. callback functions) which are executed when a route matches – before your actual ‘controller’ code runs.

So for example, a simple route would look like :

Slim::get('/route/path/to/match', function() { echo "some output goes here"; });

With additional ‘middleware’ it could look like :

Slim::get('/route/path/to/match', $caching_middleware, $authentication_check, function() { echo "some output goes here"; });

(Obviously in a real application you wouldn’t have echo statements in the front controller class)

The $caching_middleware could look like :

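    $caching_middleware = function () {
        // Full-page cache using the 'Page' frontend and the 'File' backend.
        $cache = Zend_Cache::factory('Page', 'File',
            array(
                'debug_header' => true,
                'default_options' => array(
                    'cache' => true,
                    'cache_with_get_parameters' => false,
                    'cache_with_session_variables' => true,
                    'cache_with_cookie_variables' => true,
                ),
            )
        );
        // Serves the cached page if there is one, otherwise captures the output.
        $cache->start();
    };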

(I’ve left the debug_header in, so it’s obvious when it’s working 🙂 ).

And $authentication_check is another call back – this time presumably checking $_SESSION for something….
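To make the ordering concrete, here is a minimal sketch in plain PHP of the idea described above: each middleware callable runs, in the order given, before the final handler. This is not Slim's implementation. `run_route()`, `make_auth_check()`, the `user_id` session key and the "return false to stop" convention are all hypothetical, invented for the illustration.

```php
<?php
// Hypothetical dispatcher, NOT Slim's code: the last callable is treated as
// the 'controller', everything before it as middleware.
function run_route(array $callables)
{
    $handler = array_pop($callables);
    foreach ($callables as $middleware) {
        // Assumption for this sketch: returning false stops the request.
        if (call_user_func($middleware) === false) {
            return false;
        }
    }
    call_user_func($handler);
    return true;
}

// Hypothetical authentication check: passes only if the given session
// array has a non-empty 'user_id' (the key name is made up).
function make_auth_check(array $session)
{
    return function () use ($session) {
        return !empty($session['user_id']);
    };
}

$log = array();
$caching_middleware = function () use (&$log) { $log[] = 'cache'; };
$controller = function () use (&$log) { $log[] = 'controller'; };

// Logged-in user: cache middleware, auth check, then the controller.
$ok = run_route(array(
    $caching_middleware,
    make_auth_check(array('user_id' => 42)),
    $controller,
));

// Anonymous user: the auth check returns false, so the controller never runs.
$denied = run_route(array(make_auth_check(array()), $controller));
```

In a real application the check would be handed `$_SESSION` rather than a literal array, and how a failed check stops the request depends on the framework.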

5-6 weeks ago I ordered two 24″ widescreen monitors from EBuyer – when the ParcelForce guy delivered them I told him I expected 2, and there was only one delivered. He walked off. I presumed that the other would arrive the next day or something… but it didn’t.

On seeing JailBreakMe has released a new version – which allows me to upgrade to the 4.3.3 firmware – I thought I’d update my iPhone4 – but annoyingly kept getting the following error message (whether doing an update or a full restore) :

“The iphone could not be restored an unknown error occurred 1013”

In my case, the fix was to edit /etc/hosts (OSX) and comment out / remove an entry for :

74.208.10.249 gs.apple.com

I have a feeling this was put there by TinyUmbrella or something, but nevermind. It works now.

$customer uses Zend_Cache in their codebase – and I noticed that every so often a page request would take ~5 seconds (for no apparent reason), while normally they take < 1 second …

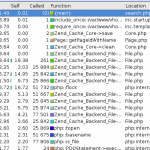

Some rummaging and profiling with xdebug showed that some requests looked like :

Note how there are 25,000 or so calls for various Zend_Cache_Backend_File thingys (fetch meta data, load contents, flock etc etc).

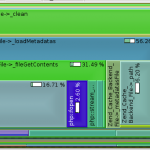

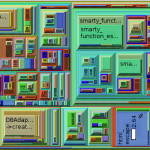

This alternative rendering might make it more clear – especially when compared with the image afterwards :

while a normal request should look more like :

Zend_Cache has an ‘automatic_cleaning_factor’ frontend parameter – which is by default set to 10 (i.e. roughly 1 in 10 write requests to the cache results in it checking if there is anything to garbage collect/clean). Since we’re nearly always writing something to the cache, this results in about 10% of requests triggering the cleaning logic. Setting it to 0 turns the automatic cleaning off.

See http://framework.zend.com/manual/en/zend.cache.frontends.html.

The cleaning is now run via a cron job something like :

$cache_instance->clean(Zend_Cache::CLEANING_MODE_OLD);
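The arithmetic behind the ‘10%’ figure can be sketched in plain PHP. As far as the Zend documentation describes it, a value of 0 means never clean automatically, 1 means clean on every write, and N greater than 1 means roughly one write in N triggers a clean. `should_clean()` below is a made-up function illustrating that rule, not Zend's code, and the random draw is passed in as a callable so the example is deterministic.

```php
<?php
// Hypothetical helper mirroring the documented rule, not Zend_Cache itself.
// $pick is a callable returning an integer between 1 and $factor.
function should_clean($factor, $pick)
{
    if ($factor <= 0) {
        return false; // 0 disables automatic cleaning
    }
    return call_user_func($pick, $factor) === 1;
}

// Walk through every possible draw for a factor of 10:
// exactly one of the ten outcomes triggers a clean.
$cleans = 0;
for ($draw = 1; $draw <= 10; $draw++) {
    $fixed = function ($factor) use ($draw) { return $draw; };
    if (should_clean(10, $fixed)) {
        $cleans++;
    }
}

// With the value at 0 no write ever triggers a clean, whatever is drawn.
$never = should_clean(0, function ($factor) { return 1; });

// In real use the draw would be random, e.g. mt_rand(1, $factor).
$random = should_clean(10, function ($factor) { return mt_rand(1, $factor); });
```

With the cleaning moved to cron, the value would presumably be set to 0 on the web-facing cache so that no page request pays for the clean-up.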

Arbitrary tweets made by TheGingerDog (i.e. David Goodwin) up to 05 July 2011

Continue reading “Automated twitter compilation up to 05 July 2011”

Arbitrary tweets made by TheGingerDog (i.e. David Goodwin) up to 20 June 2011

Continue reading “Automated twitter compilation up to 20 June 2011”

One customer of ours has a considerable amount of content which is loaded from third parties (generally adverts and tracking code), to the extent that it takes some time for page(s) to load on their website. On the website itself, there’s normally just a call to a single external JS file – which once included includes a lot of additional stuff (flash players, videos, adverts, tracking code etc etc).

On Tuesday night, I was playing catchup with the PHPClasses podcast, and heard about their ‘unusual’ optimisations – which involved using a loader class to pull in JS etc after the page had loaded. So, off I went to phpclasses.org, and found contentLoader.js (See its page – here).

Implementation is relatively easy, to the extent of adding the loader into the top of the document and “rewriting” any existing script tags/content so they’re loaded through the contentLoader.

    <script src="/wp-content/themes/xxxx/scripts/contentLoader.js" type="text/javascript"></script>
    <script type="text/javascript">
    var cl = new ML.content.contentLoader();
    // uncomment, debug does nothing for me, but the delayedContent one does.
    //cl.debug = true;
    //cl.delayedContent = '<div><img src="/wp-content/themes/images/loading-image.gif" alt="" width="24" height="24" /></div>';
    </script>

And then adding something like the following at the bottom of the page :

<script type="text/javascript"> cl.loadContent(); </script>

And, then around any Javascript you want to delay loading until after the page is ready, use :

    <script>
    cl.addContent({
        content: '' + '' + '<' + 'script type="text/javascript"' + ' src="http://remote.js/blah/blah.js" />',
        inline: true,
        priority: 50
    });
    </script>

You can control the priority of the loading – lower numbers seem to be loaded first. You can also specify a height:/width: within the addContent – but I’m not sure these work.

For all I know there may be many other similar/better mechanisms to achieve the same – I’m pretty ignorant/clueless when it comes to Javascript. It’s a bit difficult for me to test it – as I have a fairly quick net connection – however it seems to move content around when looking at the net connections from FireBug, so I think it’s working as expected.

If you’ve updated your varnish server’s configuration, there doesn’t seem to be an equivalent of ‘apachectl configtest’ for it, but you can do :

varnishd -C -f /etc/varnish/default.vcl

If everything is correct, varnish will then dump out the generated configuration. Otherwise you’ll get an error message pointing you to a specific line number.
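If the check is part of a deploy script, the exit status can gate the reload. The sketch below assumes that `varnishd -C` exits non-zero when the VCL fails to compile, which is worth confirming on your own version. `vcl_check_command()` and `vcl_is_valid()` are hypothetical helper names, and the path is simply the one used above.

```php
<?php
// Build the shell command for a compile-only check of a VCL file.
// Output is discarded: only the exit status matters here.
function vcl_check_command($vcl_path)
{
    return 'varnishd -C -f ' . escapeshellarg($vcl_path) . ' > /dev/null 2>&1';
}

// Returns true when the command exits with status 0.
// Assumption: a VCL compile error makes varnishd exit non-zero.
function vcl_is_valid($vcl_path)
{
    exec(vcl_check_command($vcl_path), $output, $status);
    return $status === 0;
}

$command = vcl_check_command('/etc/varnish/default.vcl');
// e.g. if (vcl_is_valid('/etc/varnish/default.vcl')) { /* reload varnish */ }
```

Passing the path through `escapeshellarg()` keeps the command safe if the file name ever comes from configuration rather than a literal.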