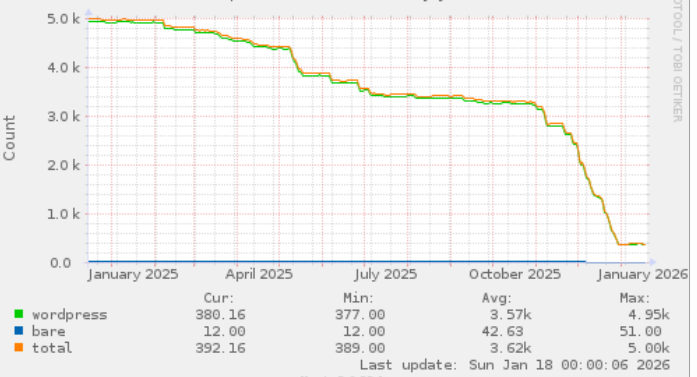

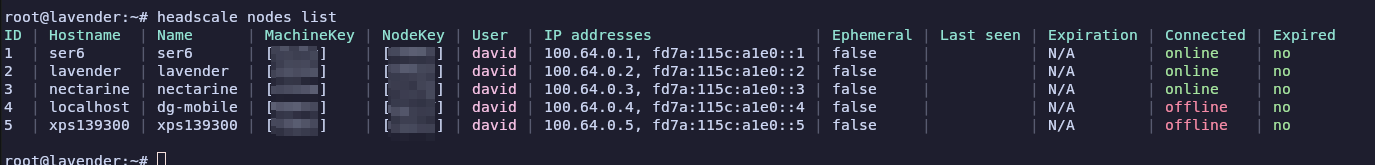

So, I’ve been using headscale for the last few months, combined with a cheap, low-spec VM from MythicBeasts.com (as my VPN “server”, or at least exit node).

Recently, we decided to ditch bastion ssh (jump) hosts at work and move to a VPN instead. This saves us from having a VM running ssh and listening for inbound connections.

Then I wondered how I could access both the work and my home tailscale networks from my laptop etc.

Initially I came across a blog/article discussing how to access two tailscale networks at once, which involved using Linux network namespaces and adding various iptables rules etc. I sort of had a go, but it didn’t seem to want to work and it felt like it was going to cause me trouble.

So I thought I’d probably have to keep switching tailscale networks somehow (e.g. tailscale down ; tailscale up ….–server … etc ). But this means I’d need to keep approving it on the headscale side etc.

Then I saw there’s a ‘tailscale switch’ command ….

    # tailscale switch --list
    ID    Tailnet              Account
    0101  my.headscale.server  david*
    1010  some-label           david.goodwin@work.corp

and switching is just a “tailscale switch some-label” or “tailscale switch my.headscale.server”

That’s a bit easier than having to reauthenticate with the appropriate tailscale network etc.
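If you flip between the two a lot, a tiny shell wrapper might save some typing. This is only a rough sketch: the function names `active_tailnet` and `ts_toggle` are made up for illustration, the two tailnet names are the ones from the listing above, and it assumes the active profile is the row whose Account column ends in `*` and that none of the columns contain spaces.

```shell
# Print the Tailnet column of the active profile.
# Reads `tailscale switch --list` output on stdin; skips the header row and
# assumes (as in the listing above) the active row's last field ends in "*".
active_tailnet() {
  awk 'NR > 1 && $NF ~ /\*$/ { print $2 }'
}

# Toggle between two tailnets. Both names are placeholders from the listing
# above; replace them with whatever `tailscale switch --list` shows for you.
ts_toggle() {
  home_net="my.headscale.server"
  work_net="some-label"
  current="$(tailscale switch --list | active_tailnet)"
  if [ "$current" = "$home_net" ]; then
    tailscale switch "$work_net"
  else
    tailscale switch "$home_net"
  fi
}
```

With those functions sourced into your shell, `ts_toggle` should hop to the work tailnet when the home one is active, and back to home otherwise.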