I’ve had cron’ed unit tests running for ages which happily spam me when stuff breaks – and likewise adding e.g. phpdoc generation and so on into the mix wouldn’t be too hard.

Anyway, for want of something better to do with my time, I thought I'd look into CI in a bit more depth for one customer's project. As some background, we've maintained their software for roughly the last 12-18 months. The project is largely procedural, although we're slowly introducing Propel, Zend Framework, Smarty etc. into the mix over time. We've also added a number of unit tests to try to keep some of the project's pain points under control.

So, there’s the background.

With regards to CI within a PHP environment there seem to be three options:

- phpUnderControl

- Xinc

- Hudson

To the best of my knowledge, phpUnderControl and Hudson both need a Java servlet container such as Tomcat, and are therefore Java based. I thought I'd try to make my life easy and stick with Xinc, which is written in PHP (and is therefore perhaps something I can hack/patch/modify if need be).

In retrospect I’m questioning whether I made the right choice – Xinc seems to be unmaintained and unloved at the moment.

Xinc Installation

It should be a case of doing something as easy as:

```shell
pear channel-discover pear.xinc.eu
pear install xinc/xinc
```

Unfortunately, as the Xinc project seems a little unloved of late, it's necessary to use an unofficial mirror instead:

```shell
pear channel-discover pear.ctrl-zetta.com
pear install ctrl-zetta/Xinc
```

(This required rummaging through Xinc’s issue log… *sigh*).

Follow the instructions and it’s not really difficult to install. There’s no requirement for a database or anything.

Once installed, edit /etc/xinc/config.xml, comment out its `<project>…</project>` block, and put your project definition in /etc/xinc/conf.d/whatever.xml instead. In my case I just copied the skeleton file and added to it, giving something like the following project.xml.

In a nutshell, this says:

- Run from /var/www/xinc/whatever.test.palepurple.co.uk

- Every 900 seconds, rebuild using what's defined in the `<builders>` tag.

- Always build (hence `<buildalways/>`). In reality you'd probably want `<svn directory="${dir}" update="true"/>` enabled instead, so that rebuilds only happen when someone has changed something in svn.

- Once the build is complete, publish the phpdoc API docs (found in ${dir}/apidocs).

- Once the build is complete, report the unit test results using ${dir}/report/logfile.xml. Obviously this path needs to match whatever your phing build.xml file writes.

- If a build fails, email root.

- If a build succeeds after a failure, email root.

- When a build succeeds, run the publish.xml file through phing (target: build). This creates a .tar.gz with an appropriate build number, which then appears in Xinc's web UI for download.
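The publish step in the last bullet is handled by phing via publish.xml. Purely to illustrate what that step amounts to, here is a rough shell equivalent; it is not the actual publish.xml, `make_release_tarball` is a hypothetical helper, and the build number is assumed to be passed in by whatever calls it.

```shell
#!/bin/sh
# Hypothetical helper (not part of Xinc or phing): package a build directory
# as <name>-<build number>.tar.gz and print the archive's path.
make_release_tarball() {
    src_dir=$1    # directory to package, e.g. the project checkout
    name=$2       # base name for the archive
    build_no=$3   # build number/label, assumed to be supplied by the CI run
    out_dir=$4    # where the archive is written (keep it outside src_dir)

    archive="$out_dir/$name-$build_no.tar.gz"
    # -C changes into src_dir first, so paths inside the archive are relative.
    tar -czf "$archive" -C "$src_dir" . || return 1
    echo "$archive"
}
```

For example, `make_release_tarball /var/www/xinc/whatever.test.palepurple.co.uk whatever 42 /tmp` would write /tmp/whatever-42.tar.gz. The output directory should sit outside the directory being packed, otherwise tar may try to include the archive in itself.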

Obviously this didn't get me very far at first, as the project wasn't using phing yet, so that became task #2.

Phing

I had a few issues once I started to phing-ise things. Firstly, I've always historically used SimpleTest as my unit test framework of choice; unfortunately its phing and Xinc integration isn't all that good, and PHPUnit is clearly superior in this respect. So I quickly converted our tests from SimpleTest to PHPUnit. Thankfully this wasn't too hard, as all my tests extend a local class, LocalTest (hence the exclude line in the build.xml file below). I just added a few aliasing methods to it so that the PHPUnit/SimpleTest method name differences (e.g. assertEqual($x, $y) vs assertEquals($x, $y)) were handled, along with some crude mimicking of SimpleTest's web_tester functionality.

Once that was done, it was pretty easy to pinch bits of config from various places and end up with something like the following build.xml file. When Xinc runs phing it defines a few properties, so I've added a couple of lines to build.xml to ensure those properties are set to something in case I'm running phing from the command line rather than via Xinc.

It was also necessary to explicitly exclude libraries such as the Zend Framework and ezComponents from various tasks (e.g. phpdoc API generation and code sniffing), because in this project the code for each lives within the project's own hierarchy rather than being a system-wide include.
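The exclusions themselves live in build.xml. As a rough illustration of which files should end up being processed, the shell sketch below lists only the project's own .php files, skipping the bundled libraries. It assumes, hypothetically, that those libraries sit under lib/Zend and lib/ezc; `list_own_php_files` is likewise a made-up helper name.

```shell
#!/bin/sh
# Hypothetical helper: print the project's own .php files, pruning the bundled
# library trees (assumed to be lib/Zend and lib/ezc under the project root).
list_own_php_files() {
    root=$1
    # -prune stops find descending into the library directories; everything
    # else that is a regular .php file is printed.
    find "$root" \
        \( -path "$root/lib/Zend" -o -path "$root/lib/ezc" \) -prune \
        -o -type f -name '*.php' -print
}
```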

Running a particular target is just a case of doing e.g. `phing -f build.xml tests`. phing defaults to using build.xml, so the `-f build.xml` is actually redundant.

Firing up Xinc (/etc/init.d/xinc start) and tail'ing /var/log/xinc.log gave me a good idea of what was going on, and eventually, with a bit of prodding, I got it all working.

I then thought I ought to integrate code coverage reports, as they'd be a useful addition and something I can point the customer to. At this point I discovered I needed to hack phing a little to get it to work with PHPUnit's XML output format when creating the code coverage report. A patch of what's needed should be available from phing.info, but the site has been down for the last few days, so here's the manual version:

In /usr/share/php/phing, edit tasks/ext/phpunit/formatter/XMLPHPUnitResultFormatter.php and change:

```php
$this->logger = new PHPUnit_Util_Log_JUnit(null, true);
```

to:

```php
$this->logger = new PHPUnit_Util_Log_XML(null, true);
```

Also change the require_once() call at the top of the file to `require_once 'PHPUnit/Util/Log/XML.php';`.

No doubt the above won’t be required once a new release of phing is made – I’m running v2.4.1.
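If you need to re-apply this on several machines, the same two edits can be scripted with sed. This is a minimal sketch that assumes your copy of the formatter still contains the stock `PHPUnit_Util_Log_JUnit(` constructor call and a require_once of 'PHPUnit/Util/Log/JUnit.php' (the original require line isn't quoted above, so check it first); `patch_phing_formatter` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: apply the two manual edits to phing's XML formatter.
# Assumes the file references PHPUnit_Util_Log_JUnit and PHPUnit/Util/Log/JUnit.php.
# A .orig backup is kept; sed reads the backup and rewrites the original path,
# which avoids the GNU/BSD differences in "sed -i".
patch_phing_formatter() {
    file=${1:-/usr/share/php/phing/tasks/ext/phpunit/formatter/XMLPHPUnitResultFormatter.php}
    cp "$file" "$file.orig" || return 1
    sed -e 's/PHPUnit_Util_Log_JUnit(/PHPUnit_Util_Log_XML(/' \
        -e 's#PHPUnit/Util/Log/JUnit\.php#PHPUnit/Util/Log/XML.php#' \
        "$file.orig" > "$file"
}
```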

Also, if your code has an implicit dependency on various variables being global, and they're not explicitly declared as global within an include file, it will fail in a way that looks like PHPUnit is trampling on your globals; it isn't. Just edit the include file and be explicit about the global declarations. You will probably also need to tell PHPUnit not to back up and restore globals between tests, since it does this by serialising them and some variables (e.g. a PDO connection) can't be serialised. This can be done by setting a property called backupGlobals to false in your test class(es), i.e. `protected $backupGlobals = FALSE;`.

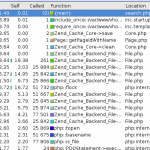

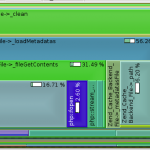

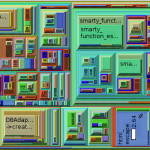

And, if everything works well, you'll see something like the attached screenshots.

Summary

Xinc appears to be unmaintained and you'll probably need to patch it, but it does appear to work.

I’m glad I’ve finally started to use phing – I can see it being of considerable use in future projects when we have to deploy via FTP or something.