Arbitrary tweets made by TheGingerDog up to 01 March 2015

Continue reading “Automated twitter compilation up to 01 March 2015”

Linux, PHP, geeky stuff … boring man.

PHP stuff

I needed to add some more file types for ack-grep to find / search when I’m looking for PHP code that resides in files with non-standard extensions (e.g. something.def, something.inc etc).

Continue reading “ack-grep config – ackrc – adding new file types”

Arbitrary tweets made by TheGingerDog up to 08 February 2015

Continue reading “Automated twitter compilation up to 08 February 2015”

Arbitrary tweets made by TheGingerDog up to 25 January 2015

Continue reading “Automated twitter compilation up to 25 January 2015”

Arbitrary tweets made by TheGingerDog up to 14 December 2014

Continue reading “Automated twitter compilation up to 14 December 2014”

So, I think I’ve changed ‘editor’. Perhaps this is a bit like an engineer changing their calculator or something.

For the last 10 years, I’ve effectively only used ‘vim’ for development of any PHP code I work on.

I felt I was best served using something like vim – where the interface was uncluttered, everything was a keypress away and I could literally fill my entire monitor with code. This was great if my day consisted of writing new code.

Unfortunately, this has rarely been the case for the last few years. I’ve increasingly found myself dipping in and out of projects – or needing to navigate through a complex set of dependencies to find methods/definitions/functions – thanks to the likes of PSR-0. Suffice to say, vim doesn’t really help me do this.

Perhaps, I’ve finally learnt that ‘raw’ typing speed is not the only measure of productivity – navigation through the codebase, viewing inline documentation or having a debugger at my fingertips is also important.

So, last week, while working on one project, I got so fed up with juggling between terminals and fighting with tab completion that I re-installed netbeans. I’m sure vim can probably do anything netbeans can, if you have the right plugins installed and super flexible fingers.

So, what have I gained/lost :

x – Fails with global variables on legacy projects though – in that netbeans doesn’t realise the variable has been pulled in through an earlier ‘require’ call.

I did briefly look at sublime a few weeks ago, but couldn’t see what the fuss was about – it didn’t seem to do very much – apart from have multiple tabs open for the various files I was editing.

I feel like I’ve spent most of this week debugging some PHP code, and writing unit tests for it. Thankfully I think this firefighting exercise is nearly over.

Perhaps the alarm bells should have been going off a bit more in my head when it was implied that the code was being written in a quick-and-dirty manner (“as long as it works….”) and the customer kept adding in additional requirements – to the extent it is no longer clear where the end of the project is.

“It’s really simple – you just need to get a price back from two APIs”

then they added in :

“Some customers need to see the cheapest, some should only see some specific providers …”

and then :

“We want a global markup to be applied to all quotes”

and then :

“We also want a per customer markup”

and so on….

And the customer didn’t provide us with any verified test data (i.e. for a quote consisting of X it should cost A+B+C+D=Y).
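To make the "verified test data" point concrete, here is a minimal sketch of the sort of table-driven check that would have helped. Everything in it is an assumption for illustration: the function name, the provider names, the idea that the global markup is applied before the per customer one, and the expected prices themselves (real ones would have to come from the customer).

```php
<?php
// Hypothetical helper: the name, arguments and markup order are assumptions.
// Prices are integer pence to avoid floating point rounding surprises.
function quote_price(array $provider_prices, array $allowed, $global_pct, $customer_pct)
{
    // Keep only the providers this customer is allowed to see.
    $visible = array_intersect_key($provider_prices, array_flip($allowed));
    if (empty($visible)) {
        throw new InvalidArgumentException('No visible provider returned a price');
    }
    $cheapest = min($visible);
    // Assumption: global markup first, then the customer markup on top of that.
    $with_global = (int) round($cheapest * (100 + $global_pct) / 100);
    return (int) round($with_global * (100 + $customer_pct) / 100);
}

// Each row: provider prices, allowed providers, global %, customer %, expected pence.
// The expected values are invented for this sketch, not real project data.
$cases = array(
    array(array('a' => 10000, 'b' => 12000), array('a', 'b'), 10, 0, 11000),
    array(array('a' => 10000, 'b' => 12000), array('b'), 10, 5, 13860),
);
foreach ($cases as $case) {
    list($prices, $allowed, $global, $customer, $expected) = $case;
    $actual = quote_price($prices, $allowed, $global, $customer);
    echo ($actual === $expected ? 'ok' : 'FAIL') . " expected $expected, got $actual\n";
}
```

Whether the two markups compound or simply add together is exactly the kind of question that a handful of customer-supplied rows like these would settle.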

The end result is an application which talks to two remote APIs to retrieve quotes. Users are asked at least 6 questions per quote (so there are a significant number of variations).

Experience made me slightly paranoid about editing the code base – I was worried I’d fix a bug in one pathway only to break another. On top of which, I initially didn’t really have any idea of whether it was broken or not – because I didn’t know what the correct (£) answers were.

Anyway, to start with, it was a bit like :

Now:

Interesting things I didn’t like about the code :

$customer uses Zend_Cache in their codebase – and I noticed that every so often a page request would take ~5 seconds (for no apparent reason), while normally they take < 1 second …

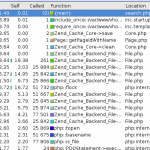

Some rummaging and profiling with xdebug showed that some requests looked like :

Note how there are 25,000 or so calls for various Zend_Cache_Backend_File thingys (fetch meta data, load contents, flock etc etc).

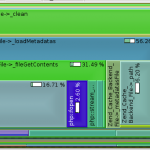

This alternative rendering might make it more clear – especially when compared with the image afterwards :

while a normal request should look more like :

Zend_Cache has an ‘automatic_cleaning_factor’ frontend parameter – which is by default set to 10 (i.e. roughly 1 in 10 write requests to the cache results in it checking if there is anything to garbage collect/clean). Since we’re nearly always writing something to the cache, this results in about 10% of requests triggering the cleaning logic.

See http://framework.zend.com/manual/en/zend.cache.frontends.html.

The cleaning is now run via a cron job something like :

$cache_instance->clean(Zend_Cache::CLEANING_MODE_OLD);
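For the cron job to take over, the random cleaning on write presumably has to be switched off as well. A minimal sketch, assuming Zend Framework 1 and a file backend; the helper name build_cache_options(), the lifetime and the cache directory are made up for illustration:

```php
<?php
// Hypothetical helper: the function name, lifetime and directory are assumptions.
function build_cache_options($cache_dir)
{
    return array(
        'frontend' => array(
            'lifetime' => 3600,
            'automatic_serialization' => true,
            // 0 switches off the random cleaning on write; cron does it instead.
            'automatic_cleaning_factor' => 0,
        ),
        'backend' => array(
            'cache_dir' => $cache_dir,
        ),
    );
}

$options = build_cache_options(sys_get_temp_dir());

// Only runs where Zend Framework 1 can be autoloaded.
if (class_exists('Zend_Cache')) {
    $cache_instance = Zend_Cache::factory('Core', 'File', $options['frontend'], $options['backend']);
    // This is the part the cron job runs: drop expired entries in one go.
    $cache_instance->clean(Zend_Cache::CLEANING_MODE_OLD);
}
```

The cron script has to run as a user that can write to the cache directory, otherwise the expired files stay where they are.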

Recently I’ve been trying to cache more and more stuff – mostly to speed things up. All was well while I was storing relatively small amounts of data – because (as you’ll see below) my approach was a little flawed.

Random background – I use Zend_Cache, in a sort of wrapped up local ‘Cache’ object, because I’m lazy. This uses Zend_Cache_Backend_File for storage of data, and makes sure e.g. different sites (dev/demo/live) have their own unique storage location – and also that nothing goes wrong if e.g. a maintenance script is run by a different user account.

My naive approach was to do e.g.

$cache_key = 'lots_of_stuff';
$cached_data = $cache->load($cache_key);
if (!is_array($cached_data)) {
    // load() returns false on a cache miss
    $cached_data = array();
}
if (isset($cached_data[$key])) {
    return $cached_data[$key];
}
// calculate $value
$cached_data[$key] = $value;
$cache->save($cached_data, $cache_key);
return $value;

The big problem with this is that the $cached_data array tends to grow quite large; and PHP spends too long unserializing/serializing. The easy solution for that is to use more than one cache key. Problem mostly solved.
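A minimal sketch of the "more than one cache key" idea, so that each lookup only unserializes the one value it needs. ArrayCache is a hypothetical in-memory stand-in (so the example runs without Zend Framework) that mimics the load()/save() calls used above; cached_value() and the 'stuff_' prefix are likewise made up.

```php
<?php
// Hypothetical in-memory stand-in for the cache wrapper, so the sketch runs on its own.
// It mimics Zend_Cache's load() (false on a miss) and save($data, $id).
class ArrayCache
{
    private $store = array();

    public function load($id)
    {
        return isset($this->store[$id]) ? unserialize($this->store[$id]) : false;
    }

    public function save($data, $id)
    {
        $this->store[$id] = serialize($data);
        return true;
    }
}

// One cache entry per $key, instead of one big array under a single id.
// Caveat: a cached value of false is indistinguishable from a miss here.
function cached_value($cache, $key, $calculate)
{
    // Zend_Cache ids may only contain [a-zA-Z0-9_], so hash the key.
    $cache_id = 'stuff_' . md5($key);
    $cached = $cache->load($cache_id);
    if ($cached !== false) {
        return $cached;
    }
    $value = call_user_func($calculate, $key);
    $cache->save($value, $cache_id);
    return $value;
}

$cache = new ArrayCache();
$calls = 0;
$slow = function ($key) use (&$calls) {
    $calls++;
    return strtoupper($key);
};
echo cached_value($cache, 'some key', $slow), "\n"; // calculated
echo cached_value($cache, 'some key', $slow), "\n"; // served from the cache
echo "calculations: $calls\n";
```

The trade-off is many small cache files rather than one large one, which is usually fine until the sheer number of load() calls becomes the bottleneck.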

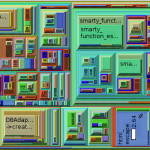

However, if the site is performing a few thousand calculations, speed of [de]serialisation is still going to be an issue – even if the data involved is in small packets. I’d already profiled the code with xdebug/kcachegrind and could see PHP was spending a significant amount of time performing serialisation – and then remembered a presentation I’d seen at PHPBarcelona (http://ilia.ws/files/zendcon_2010_hidden_features.pdf – see slides 14/15/16 I think) covering Igbinary (https://github.com/phadej/igbinary).

Once you install the extension –

phpize
./configure
make
cp igbinary.so /usr/lib/somewhere
# add .ini file to /etc/php5/conf.d/

You’ll have access to igbinary_serialize() and igbinary_unserialize() (I think ‘make install’ failed for me, hence the manual cp etc).

I did a random performance test based on this and it seems to be somewhat quicker than other options (json_encode/serialize) – this was using PHP 5.3.5 on a 64bit platform. Each approach used the same data structure (a somewhat nested array); the important things to realise are that igbinary is quickest and uses less disk space.

JSON (json_encode/json_decode):

Native PHP :

Igbinary :

The performance testing bit is related to this Stackoverflow comment I made on what seemed a related post
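A rough sketch of how such a comparison can be put together. This is not the original test script: the data shape, the iteration count and the helper names time_round_trip() and json_decode_assoc() are assumptions, and igbinary is only included when the extension is actually loaded.

```php
<?php
// Rough timing harness; the data shape and iteration count are arbitrary assumptions.
function time_round_trip($encode, $decode, $data, $iterations)
{
    $start = microtime(true);
    for ($i = 0; $i < $iterations; $i++) {
        $encoded = call_user_func($encode, $data);
        $decoded = call_user_func($decode, $encoded);
    }
    return array(
        'seconds' => microtime(true) - $start,
        'bytes' => strlen($encoded),
        'intact' => ($decoded == $data),
    );
}

// Decode to arrays rather than stdClass objects, so the round trip is comparable.
function json_decode_assoc($json)
{
    return json_decode($json, true);
}

// A somewhat nested array, standing in for the real cached data.
$data = array();
for ($i = 0; $i < 200; $i++) {
    $data["item_$i"] = array('id' => $i, 'name' => "name $i", 'tags' => array('a', 'b', 'c'));
}

$candidates = array(
    'json' => array('json_encode', 'json_decode_assoc'),
    'serialize' => array('serialize', 'unserialize'),
);
// igbinary is only compared when the extension is actually loaded.
if (function_exists('igbinary_serialize')) {
    $candidates['igbinary'] = array('igbinary_serialize', 'igbinary_unserialize');
}

$results = array();
foreach ($candidates as $name => $pair) {
    $results[$name] = time_round_trip($pair[0], $pair[1], $data, 1000);
    printf("%-10s %.4fs %6d bytes\n", $name, $results[$name]['seconds'], $results[$name]['bytes']);
}
```

Timings from something like this vary a lot between machines and PHP versions, so it is only useful for comparing the options against each other on the same box with data shaped like the real thing.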

Everyone else probably already knows this, but $project is/was doing two queries on the MySQL database every time the end user typed in something to search on

This is all very good, until the logic in the two queries becomes sufficiently different: when I deliberately set the offset in query #1 to 0 and the limit very high, I find that the number of rows returned by the two queries doesn’t match (this leads to broken paging, for example).

Then I thought – surely everyone else doesn’t do a count query and then repeat it for the range of data they want back – there must be a better way… mustn’t there?

At which point I found:

http://forge.mysql.com/wiki/Top10SQLPerformanceTips

and

http://dev.mysql.com/doc/refman/5.0/en/information-functions.html#function_found-rows

See also the comment at the bottom of http://php.net/manual/en/pdostatement.rowcount.php which gives a good enough example (Search for SQL_CALC_FOUND_ROWS)
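For reference, a minimal sketch of the SQL_CALC_FOUND_ROWS approach with PDO. The function name search_page() and the articles table with its id/title columns are hypothetical, and $pdo is assumed to be a connection to MySQL. It is also worth checking against the MySQL version in use, since newer releases (8.0.17 onwards) deprecate SQL_CALC_FOUND_ROWS.

```php
<?php
// Hypothetical table/column names; $pdo is assumed to be a PDO connection to MySQL.
function search_page(PDO $pdo, $term, $offset, $limit)
{
    // SQL_CALC_FOUND_ROWS asks MySQL to remember how many rows would have
    // matched if there had been no LIMIT clause.
    $stmt = $pdo->prepare(
        'SELECT SQL_CALC_FOUND_ROWS id, title FROM articles
         WHERE title LIKE :term ORDER BY title LIMIT :offset, :limit'
    );
    $stmt->bindValue(':term', '%' . $term . '%', PDO::PARAM_STR);
    $stmt->bindValue(':offset', (int) $offset, PDO::PARAM_INT);
    $stmt->bindValue(':limit', (int) $limit, PDO::PARAM_INT);
    $stmt->execute();
    $rows = $stmt->fetchAll(PDO::FETCH_ASSOC);

    // Must run on the same connection, straight after the SELECT above.
    $total = (int) $pdo->query('SELECT FOUND_ROWS()')->fetchColumn();

    return array('rows' => $rows, 'total' => $total);
}
```

Because the page of rows and the total come from the same WHERE clause, the two can no longer drift apart the way two separately maintained queries do.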

A few modifications later, run unit tests… they all pass…. all good.

I also found some interesting code like :

$total = sizeof($blah);

if($total == 0) { … }

elseif ($total != 0) { …. }

elseif ($something) { // WTF? }

else { // WTF? }

(The WTF comments were added by me… and I did check that I wasn’t just stupidly tired and not understanding what was going on).

The joys of software maintenance.