I have a legacy virtual machine scale set which was created with neither Encryption at Host nor Trusted Launch.


It’s always DNS …. (unbound / domain signing stuff)

Yesterday, I spent most of my day wondering what was wrong with my unbound configuration. As a TL;DR: if you’re creating a new TLD (e.g. foo.lan), you may need to disable DNSSEC checks on it within unbound’s config using the ‘domain-insecure’ setting.
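As a rough illustration of that setting, the script below writes a small unbound drop-in that stops unbound expecting DNSSEC signatures for a private TLD. It is a minimal sketch: the zone name (foo.lan) and the output path are assumptions, and both can be overridden from the environment.

```shell
#!/bin/sh
# Minimal sketch: write an unbound drop-in that turns off DNSSEC validation for
# a private TLD. ZONE ("foo.lan") and CONF (the output path) are assumptions;
# both can be overridden from the environment.
set -eu

ZONE="${ZONE:-foo.lan}"
CONF="${CONF:-./insecure-private-tld.conf}"

cat > "$CONF" <<EOF
server:
    # No DNSSEC chain of trust exists for a made-up TLD, so don't demand one
    domain-insecure: "$ZONE"
EOF

echo "wrote $CONF for $ZONE"
```

On Debian, a file like this would typically go under /etc/unbound/unbound.conf.d/. After that, `unbound-checkconf` should accept it, and `unbound-control reload` (or a service restart) should pick it up.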


Initial foray into Terraform / OpenTofu

So over the last couple of weeks at work, I’ve been learning to use Terraform (well OpenTofu) to help us manage multiple deployments in Azure and AWS.

The thought being that we can have a single ‘plan’ of what a deployment should look like, and deviations will be spotted / can be alerted on.
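One way to spot those deviations is the `-detailed-exitcode` flag of `plan`, which OpenTofu shares with Terraform. It exits with 0 when there are no changes, 1 on error and 2 when the real infrastructure differs from the config. The function below is a minimal sketch built on that flag. The function name and the TOFU override are made up for this example, and the actual alerting step is left open.

```shell
#!/bin/sh
# Minimal drift-check sketch. Relies on `tofu plan -detailed-exitcode`
# (same as `terraform plan`): 0 = no changes, 1 = error, 2 = changes.
# TOFU can be overridden (e.g. TOFU=terraform); alerting is left to the caller.

check_drift() {
    dir="${1:-.}"
    # Run plan non-interactively and keep its exit status
    if "${TOFU:-tofu}" -chdir="$dir" plan -detailed-exitcode -input=false -no-color >/dev/null; then
        status=0
    else
        status=$?
    fi
    case "$status" in
        0) echo "no-drift" ;;
        2) echo "drift" ;;
        *) echo "error" ;;
    esac
    return "$status"
}
```

Run from a scheduled job, `check_drift ./azure` (path hypothetical) prints "drift" and returns 2 when someone has changed things behind tofu’s back, which is easy to hang an alert off.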

I was tempted to try and write a contrived article showing how you could create a VM in AWS (or Azure) using Terraform, but I’m not sure I’ve got anything to add over the 101 other articles on the internet.

Vaguely useful things:

- The tofu configuration is much quicker to write than e.g. trying to talk to AWS using its SDK (something I did do about 7-8 years ago)

- You can split the config up into multiple .tf files within your working directory, the tool just merges them all together at run time

- Having auto-complete in an editor is pretty much necessary (in my case, PHPStorm)

- tofu is quite quick to run: it doesn’t take long to check the state of the known resources and the config files, which is good; unfortunately Azure often takes some time to do something on its end…

- I’ve yet to see any point in writing a module to try and encapsulate any of our configuration as I can’t see any need to re-use bits anywhere

I’m not sure how we’re going to go about reconciling our legacy (production) environment with a newer / shiny one built with tofu though.

LetsEncrypt + Azure Keyvault + Application gateway

A few years ago I set up an Azure Function App to retrieve LetsEncrypt certificates for a few $work services.

Annoyingly that silently stopped renewing stuff.

Given I’ve no idea how to update it or really investigate it (it’s too much of a black box), I decided to replace it with certbot, hopefully run through a scheduled GitHub Action.

To keep things interesting, I need to use the Route53 DNS stuff to verify domain ownership.

Random bits:

```shell
docker run --rm -e AWS_ACCESS_KEY_ID="$AWS_ACCESS_KEY_ID" \
    -e AWS_SECRET_ACCESS_KEY="$AWS_SECRET_ACCESS_KEY" \
    -v "$(pwd)/certs:/etc/letsencrypt/" \
    -u "$(id -u "${USER}"):$(id -g "${USER}")" \
    certbot/dns-route53 certonly \
    --agree-tos \
    --email=me@example.com \
    --server https://acme-v02.api.letsencrypt.org/directory \
    --dns-route53 \
    --rsa-key-size 2048 \
    --key-type rsa \
    --keep-until-expiring \
    --preferred-challenges dns \
    --non-interactive \
    --work-dir /etc/letsencrypt/work \
    --logs-dir /etc/letsencrypt/logs \
    --config-dir /etc/letsencrypt/ \
    -d mydomain.example.com
```

Azure needs the RSA key size (2048) and the key type to be specified. I tried 4096 and it told me to f.off.

Once that’s done, the following seems to produce a certificate that Key Vault will accept and the load balancer can use, including an intermediate certificate / chain:

```shell
cat certs/live/mydomain.example.com/{fullchain.pem,privkey.pem} > certs/mydomain.pem
openssl pkcs12 -in certs/mydomain.pem -keypbe NONE -certpbe NONE -nomaciter -passout pass:something -out certs/something.pfx -export
az keyvault certificate import --vault-name my-azure-vault -n certificate-name -f certs/something.pfx --password something
```

Thankfully that seems to get accepted by Azure, and when it’s applied to an application gateway listener, clients see an appropriate chain.
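Clients only see a chain if the intermediate actually made it into the PFX. A cheap safeguard for an unattended (e.g. scheduled) run is to count the certificates in fullchain.pem before converting. The script below is a minimal sketch of that check wrapped around the same cat/openssl steps as above. It assumes certbot’s certs/live/&lt;domain&gt;/ layout from the docker command; the function names are made up for this example.

```shell
#!/bin/sh
# Sketch: check that certbot's fullchain.pem really contains an intermediate
# before building the PFX for Key Vault. Paths follow the certbot layout used
# above (certs/live/<domain>/); the function names are made up for this example.
set -eu

# Count PEM certificate blocks in a file (prints 0 if there are none)
count_certs() {
    grep -c -- '-----BEGIN CERTIFICATE-----' "$1" || true
}

build_pfx() {
    domain="$1"
    pass="$2"
    live="certs/live/$domain"
    if [ ! -f "$live/fullchain.pem" ] || [ ! -f "$live/privkey.pem" ]; then
        echo "missing certbot output under $live" >&2
        return 1
    fi
    n=$(count_certs "$live/fullchain.pem")
    # Leaf only: the gateway would probably serve no intermediate to clients
    if [ "$n" -lt 2 ]; then
        echo "expected leaf + intermediate in $live/fullchain.pem, found $n" >&2
        return 1
    fi
    cat "$live/fullchain.pem" "$live/privkey.pem" > "certs/$domain.pem"
    openssl pkcs12 -export -in "certs/$domain.pem" \
        -keypbe NONE -certpbe NONE -nomaciter \
        -passout "pass:$pass" -out "certs/$domain.pfx"
}
```

For example, `build_pfx mydomain.example.com something` would leave certs/mydomain.example.com.pfx ready for the `az keyvault certificate import` step, or fail loudly if the chain looks wrong.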

Resizing a VM’s disk within Azure

Random notes on resizing a disk attached to an Azure VM …

Check what you have already:

```shell
az disk list --resource-group MyResourceGroup --query '[*].{Name:name,Gb:diskSizeGb,Tier:accountType}' --output table
```

which might output something like:

```
Name      Gb
--------  ----
foo-os    30
bar-os    30
foo-data  512
bar-data  256
```

So here, we can see the ‘bar-data’ disk is only 256GB.

Assuming you want to grow it to 512GB (Azure doesn’t support an arbitrary size; you need to choose a supported size), run:

```shell
az disk update --resource-group MyResourceGroup --name bar-data --size-gb 512
```

Then wait a bit …

In my case, the VMs are running Debian Buster, and after the resize has completed I see this in the ‘dmesg’ output (on the server itself):

```
[31197927.047562] sd 1:0:0:0: [storvsc] Sense Key : Unit Attention [current]
[31197927.053777] sd 1:0:0:0: [storvsc] Add. Sense: Capacity data has changed
[31197927.058993] sd 1:0:0:0: Capacity data has changed
```

Unfortunately the new size doesn’t show up straight away to the OS, so I think you either need to reboot the VM or (what I do) trigger a rescan of the device as root (sda in my case; use whichever device you resized):

```shell
echo 1 > /sys/class/block/sda/device/rescan
```

at which point the new size appears in your ‘lsblk’ output, and the filesystem can be resized using e.g. resize2fs.
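Put together, the OS-side steps look roughly like the script below. It is a sketch under stated assumptions: you run it as root, an ext2/3/4 filesystem sits directly on the whole disk with no partition table (a partition would need growing first), and the device name (e.g. sdc) is a placeholder. With DRY_RUN=1 it only prints what it would run.

```shell
#!/bin/sh
# Sketch: make Linux notice a grown Azure data disk, then grow its filesystem.
# Assumptions: run as root; an ext2/3/4 filesystem sits directly on the whole
# disk (no partition table, otherwise the partition needs growing first);
# the device name is passed in (e.g. sdc). DRY_RUN=1 only prints the commands.
set -eu

run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf '%s\n' "$*"
    else
        sh -c "$*"
    fi
}

grow_disk() {
    dev="${1:?usage: grow_disk <device, e.g. sdc>}"
    # Ask the SCSI layer to re-read the capacity (same as the echo above)
    run "echo 1 > /sys/class/block/$dev/device/rescan"
    # Check that the new size is now visible
    run "lsblk /dev/$dev"
    # Grow the (mounted) ext filesystem online to fill the device
    run "resize2fs /dev/$dev"
}
```

Running `DRY_RUN=1 grow_disk sdc` first is a cheap way to check the device name before doing it for real.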

Traefik + Azure Kubernetes

Just a random note or two …

At work we moved most of our hosting to Azure, for ‘reasons’. We run much of our workload through Kubernetes.

The Azure portal has a nice integration to easily deploy a project from a GitHub repo into Kubernetes, and when it does, it puts each project in its own namespace.

In order to deploy some new functionality, I finally bit the bullet and tried to get some sort of Ingress router in place. I chose to use Traefik.

Some random notes ….

- You need to configure/run Traefik with --providers.kubernetescrd.allowCrossNamespace=true; without this it’s not possible for e.g. Traefik (in the ‘traefik’ namespace) to use MyCoolApi in the ‘api’ namespace. The IngressRoute HAS to be in the same namespace as Traefik is running in, so it needs to reference a service in a different namespace…

- While you’re poking around, you probably want to run Traefik with --log.level=DEBUG

- Use cert-manager for LetsEncrypt certificates (see https://www.andyroberts.nz/posts/aks-traefik-https/ for some details)

- You need to make sure you’re using a fairly recent Kubernetes version; ours was on 1.19.something, which helpfully just silently “didn’t work” when trying to get the cross-namespace stuff working.

- Use k9s as a quick way to view logs/pods within the cluster.

Example Ingress Route

```yaml
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  namespace: traefik
  name: projectx-ingressroute
  annotations:
    kubernetes.io/ingress.class: traefik
    cert-manager.io/cluster-issuer: my-ssl-cert
spec:
  entryPoints:
    - websecure
  routes:
    - kind: Rule
      match: Host(`mydomain.com`) && PathPrefix(`/foo`)
      services:
        - name: foo-api-service
          namespace: foo-namespace
          port: 80
  tls:
    secretName: my-ssl-cert-tls
    domains:
      - main: mydomain.com
```

Initially I tried to use Traefik’s built-in LetsEncrypt support, with a shared filesystem (Azure Storage, CIFS etc.) so that multiple Traefik replicas could share the same certificate store. Unfortunately this just won’t work: the CIFS share gets mounted with 777 permissions, and Traefik refuses to put up with that for its certificate store (it wants the file to be 600).

AWS vs Azure … round 1, fight!

So, for whatever reason, I need to move some virtual machines and things from AWS (EC2, RDS) to Azure. I have a few years’ experience with AWS, but until recently I’ve not really used Azure.

Here are some initial notes:

- AWS tooling feels more mature (with the ‘stock’ ansible that ships with Ubuntu 20.10, I’m not able to create a virtual machine in Azure without having python module errors appear)

- AWS EBS disks are more flexible – I can enlarge and/or change their performance profile at runtime (no downtime). With Azure, I have to shutdown the server before I can change them.

- AWS SSL certificates are better: for Azure I had to install a LetsEncrypt application and integrate it with my DNS provider (e.g. https://github.com/shibayan/keyvault-acmebot). AWS has its certificate service, which issues free certs, built in, and if the domain is already in Route53 there’s hardly anything to do.

- Azure gives you more control over availability (with its concept of availability sets, it allows you to have some control over VM placement and the order in which updates are applied). It also offers Proximity Placement Groups, allowing you to influence the physical placement of resources to reduce latency etc.

- Azure feels more ‘commercial’ (with the various different third party products appearing in the portal when you search etc).

- Azure has worse support for IPv6 (e.g. if you have a VPN within your Virtual Network you can’t have IPv6).

- Azure doesn’t seem to offer ARM based Virtual Machines (see also: EC2 Graviton 2), and has fewer AMD equivalents.

- Azure’s pricing feels harder to understand: there’s often a ‘standard’ and ‘premium’ option for most products, but the description of the differences is often buried in documentation away from the portal, and I often see ‘Pricing unavailable’.

  - Do I want a premium IP address?

  - Do I need Ultra or Premium SSDs, or will Standard SSD suffice? Will I be able to change/revert if I’ve chosen the wrong one without deleting and recreating something?

  - Why do I need to choose a VPN server SKU?

- Azure networks all have outbound NAT based internet access by default, so even if you’ve not assigned a public IP address to a resource, it can reach out. At the same time, you can also buy a NAT Gateway. If you give a VM a public IP address then it will use that for its outbound traffic.

- Azure has a lot of services in ‘preview’ (which, to me, means beta). At the time of writing (March 2021), it doesn’t yet offer a production-ready:

  - MySQL database service that has zone redundancy (i.e. no real high availability)

  - storage equivalent of EFS (NFS is in preview)

- Azure does provide a working serial console for VMs, which is quite handy when systemd decides to throw a fit on bootup (2021/04/02 – AWS apparently now provides this too!).

- Azure doesn’t let you detach the root volume from a stopped server to mount it elsewhere (e.g. for maintenance to fix something that won’t boot up!).

- When deleting a VM in Azure, it’s necessary to manually delete linked disks. In AWS they can be cleaned up at the same time.

Packer and Azure

I needed to build some Virtual Machine images (using packer) for work the other day.

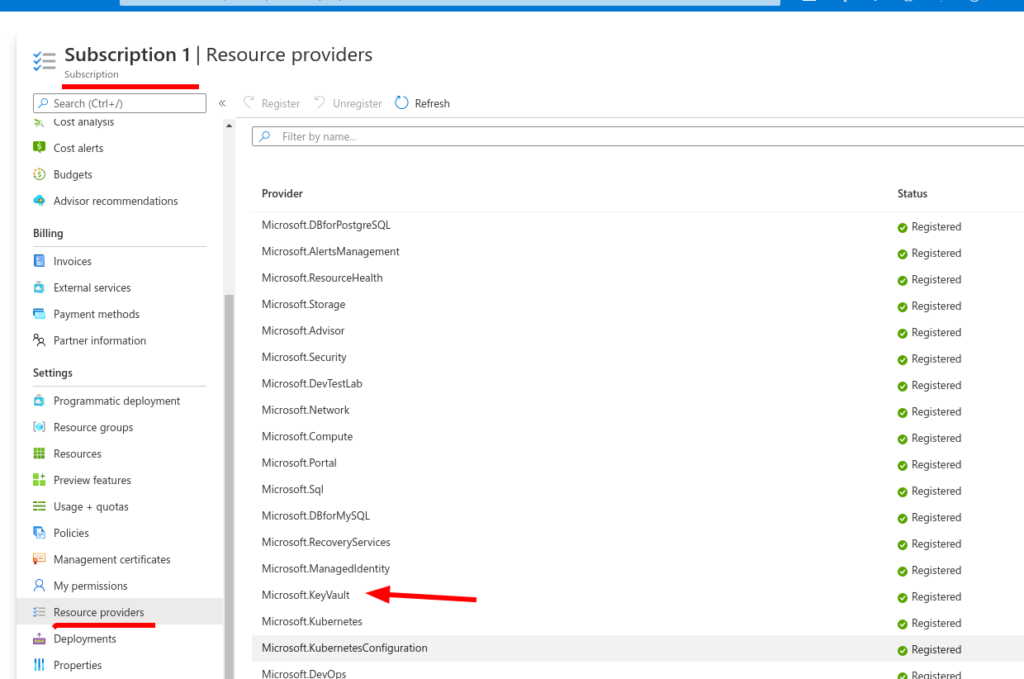

I already have a packer configuration set up (but for AWS), and when trying to add support for an ‘azure-arm’ builder, I kept getting the following error message in my web browser as I attempted to authenticate packer with Azure:

“AADSTS650052: The app needs to access to a service (https://vault.azure.net) that your organization \”<random-id>\” has not subscribed or enabled. Contact your IT Admin to review the configuration of your service subscriptions.”

This isn’t the most helpful of error messages, when I’m probably meant to be the “IT Admin”.

After eventually giving in (as I couldn’t find any similar reports of this problem) and reaching out to our contact at Microsoft, it turns out we needed to enable some additional Resource Providers in the Subscription… and of course the name is slightly different from the one in the error 😉 (Microsoft.KeyVault). Oh well….
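Registering the provider can be scripted with `az provider show` / `az provider register`, so a fresh subscription can be prepared before packer runs. The function below is a minimal sketch that checks the provider’s registrationState and, if needed, registers it and polls until Azure reports "Registered". It assumes you have already run `az login` and selected the right subscription. The function names, the AZ override and the 10 second poll interval are made up for this example.

```shell
#!/bin/sh
# Sketch: make sure a resource provider (default Microsoft.KeyVault) is
# registered in the current subscription before running packer's azure-arm
# builder. Assumes `az login` has been done and the right subscription is
# selected; AZ and POLL_SECONDS may be overridden (e.g. for testing).

provider_state() {
    "${AZ:-az}" provider show --namespace "$1" --query registrationState --output tsv
}

ensure_provider() {
    ns="${1:-Microsoft.KeyVault}"
    state=$(provider_state "$ns")
    if [ "$state" = "Registered" ]; then
        echo "$ns already registered"
        return 0
    fi
    "${AZ:-az}" provider register --namespace "$ns" >/dev/null
    # Registration is asynchronous, so poll until Azure reports it as done
    while [ "$state" != "Registered" ]; do
        sleep "${POLL_SECONDS:-10}"
        state=$(provider_state "$ns")
    done
    echo "$ns registered"
}
```

Calling `ensure_provider Microsoft.KeyVault` at the start of the build script should then prevent the AADSTS650052 error from recurring in a new subscription.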

Having done this, Packer does now work (Hurrah!)

Hopefully this will help someone else in the future.